The discourse surrounding artificial intelligence apps, such as the infamous ChatGPT, is chaotic, to say the least. One moment it’s the user’s best friend, hailed as the revolutionary new way to boost productivity, spark creativity and save workers loads of time. The next minute, sentiment shifts and we move towards a futuristic man vs. machine dialogue. Fears about redundancy in the workplace creep in, as do (justifiable) concerns about security and how our data will be protected.

In a recent blog, we weighed up the pros and cons of ChatGPT and outlined everything you need to know about the platform.

However, the landscape changes daily, and trust us, we’re watching these developments like a hawk!

From major privacy breaches to big new software developments, it’s all happening in the world of AI. Here are the latest developments you need to know about.

Privacy concerns as user prompts leaked

A recent ChatGPT glitch allowed some users to see the titles of other users’ conversations. Individuals on Reddit and Twitter shared images of chat histories that did not belong to them. One Reddit user shared a screenshot of their chat history, which included titles such as "Chinese Socialism Development", as well as conversations in Mandarin.

OpenAI, the company behind ChatGPT, has admitted the breach was “significant” and says the glitch has now been fixed.

What’s concerning is that the glitch shows OpenAI has access to user chats.

While the company’s privacy policy does indicate that user data, including prompts and responses, may be used to inform model developments, it also states that all personally identifiable information will be removed as that data is collected.

This latest privacy breach raises questions about whether that is actually the case.

Personal privacy concerns are one thing, but this data breach opens up an entirely new can of worms for business owners.

Facebook accounts hacked

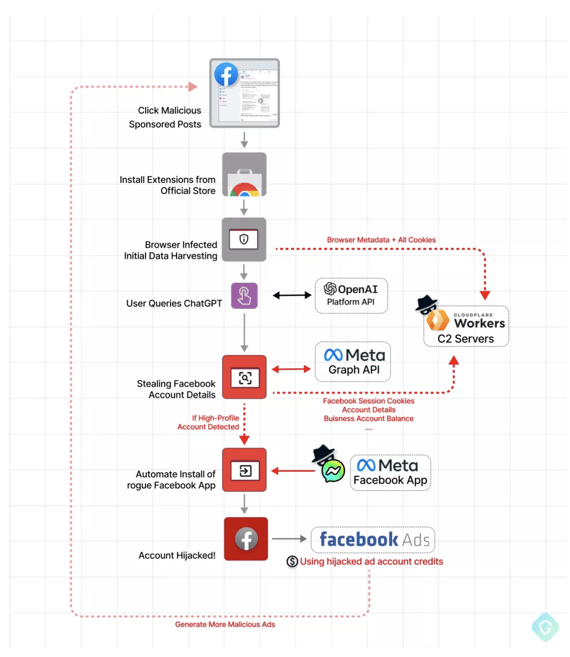

More than 2,000 people have unknowingly put their Facebook accounts at risk after downloading the “Quick access to ChatGPT” extension from the Chrome Web Store. Business accounts have also been compromised.

The extension claimed to offer users a quick way to interact with the ChatGPT chatbot. Instead, it harvested information from the browser, stole cookies and installed malware that gave attackers access to the user’s Facebook account.

The ‘threat actors’ behind it are then likely to use the collected data for financial gain, for example by selling it to third parties.

This browser extension is just one of many threats that have emerged since ChatGPT’s boom in popularity. Fake ChatGPT landing pages, ChatGPT-themed phishing emails and fake apps purporting to be the platform in order to spread malware are all scams to be aware of.

How malicious actors steal your data. Image credit: Guardio

Some alarming report findings

Data security firm Cyberhaven has released the findings of a new report, which indicate large language models (LLMs) such as ChatGPT pose some major security threats for companies.

According to the firm, 3.1% of the 1.6 million workers at its client companies have copied and pasted confidential information, client data, source code or regulated information into LLMs.

The report indicates that the average company is leaking confidential material to ChatGPT hundreds of times per week.

Examples of confidential information that is being copied and pasted into LLMs include:

- Sensitive client data such as patient records

- Strategy documents not intended for external viewing

- Project planning files

- Source code

- Proprietary information and trade secrets

There are concerns that AI services could incorporate this sensitive data into their models and that this information could then enter the public domain.

As a result, cautious employers are being urged to review their confidentiality agreements and policies, paying particular attention to how employees are permitted to use AI technologies in the workplace.

Also in the news...

It's not all bad! Privacy concerns may be rampant, but they are not the only topic sparking AI-related conversation in the news right now. There have also been some more promising updates about the future of OpenAI's flagship application, as new improvements are made and partnerships struck.

OpenAI launches updated model

OpenAI, the San Francisco AI lab behind ChatGPT, co-founded by Sam Altman and Elon Musk among others, has launched GPT-4.

GPT-4 is the new and improved successor to GPT-3.5, the model that currently powers the free version of ChatGPT.

According to OpenAI, GPT-4 is “multimodal” in that it can accept image and text inputs and emit text outputs. OpenAI also claims it is more creative, less biased and better at picking up nuance. GPT-4 can process up to around 25,000 words, roughly eight times as many as its predecessor.

Users with a free account will continue to use the existing GPT-3.5 model for now.

To unlock GPT-4, you need to sign up for ChatGPT Plus, a paid monthly subscription.

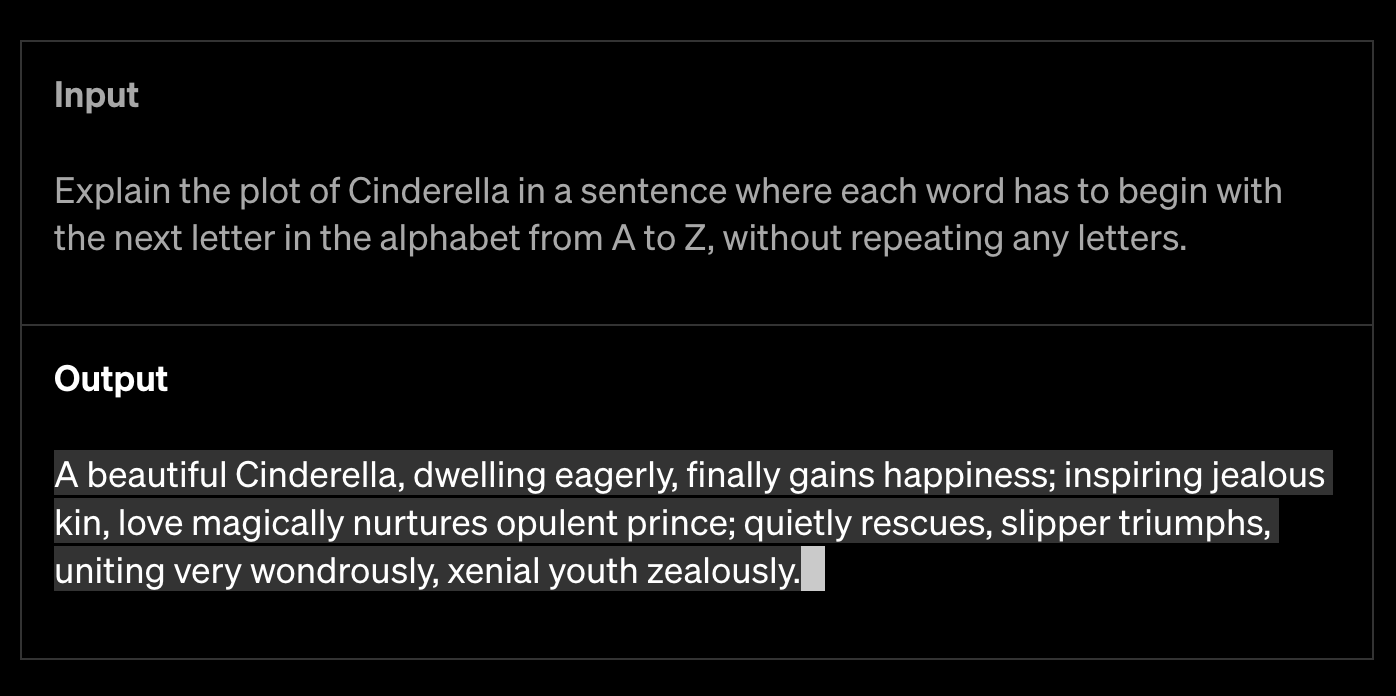

An example of how GPT-4 can produce creative output. Image credit: OpenAI

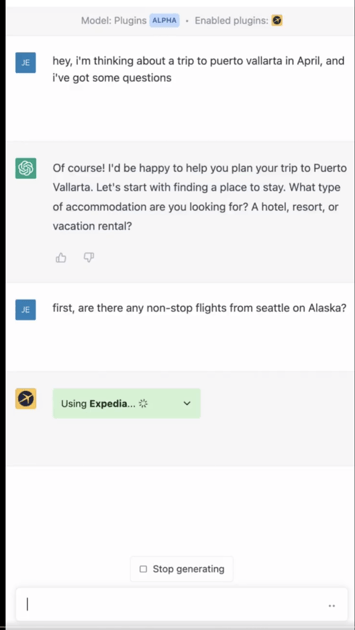

Partnership with travel giants announced

ChatGPT plugins built with travel firms Expedia and Kayak will soon be available. The plugins will enable the AI to act as a travel agent or personal assistant as you plan your holidays.

The plugins work within the existing ChatGPT platform. Users will be able to type prompts such as “Where can I fly in Australia for under $200 in December?” and receive personalised travel recommendations.

Watch this space as the plugins will be rolled out gradually. They will initially be available to ChatGPT Plus users only.

Image credit: Expedia Group

Summing it all up

Clearly, ChatGPT is not going anywhere. In fact, as OpenAI launches new and improved versions and partners with third parties to create an even richer user experience, the technology will no doubt become more influential than ever.

However, there are real and pressing security issues that individuals and companies must address.

We need to use the resources available to us wisely.

Now is the time to educate yourself, and your employees, about safe AI usage and take tangible steps to safeguard confidential information.

This is a whole new tech era. Get smart about it.

How can Diamond IT help?

Diamond IT will work with you to ensure your staff are aware of the ever-evolving types of cyber threats, and equip them with the tools they need along with a high level of cyber and data awareness.

Our online Cyber Security Staff Awareness Training and Cyber Security Health Check can have an immediate impact on the strength of your security.

Our Business Technology Consultants are specialists in improving your internal cyber security. We offer a range of security solutions to ensure your employees and business remain secure, many of which are included in our Managed Services Agreements, such as:

- Multi-Factor Authentication

- Diamond Management Systems and Patching

- Cyber Security Awareness Training

- Cyber and Data Breach Consulting and Forensic Analysis

- Disaster Recovery (DR) Planning

If you need advice on how you can ensure your cyber security strategy is fit for purpose, our team of cyber security experts are ready to help. Contact our team on 1300 307 907 today.